Back in December 2013, I put together a presentation for my alma mater, SUNY Oswego, about the learning moments that came to the foreground from the tornadoes of May 2013. These tornadoes differed from just about all other outbreaks in that the same metropolitan area was struck on multiple days. The 2013 Moore tornado, the third violent tornado to strike that city in the past 15 years, exacerbated the sense of fear from these storms and set the stage for an unprecedented public reaction when yet another large tornado threatened the metro just days later. The closest analog to this kind of severe weather would be the multiple tornado outbreaks of May 2003 from Missouri to Oklahoma. What I present is somewhat biased in that it reflects my own worldview; however, I've been influenced by many discussions about the May tornadoes, and my views have changed as a result, consciously and otherwise. Many of these learning moments echo those from earlier major tornadoes in other parts of the country, and even here in Oklahoma in years past. Perhaps, however, the 2013 tornadoes, together with the heightened focus on how residents of this state manage their risk, brought these topics into laser focus in our discussions. I'll reflect on some of them in the following series of pictures, with captions taken from my presentation.

Yes, central Oklahoma suffered in 2013. However, so many tornadoes in close proximity to a large meteorological center provide a great opportunity to learn from these disasters. Not everything learned is in this talk, but I hope the pictures that follow convey some of its essence.

This is an animated slide from the presentation highlighting how remarkably the quality of forecasting has improved over the past several years. When you see this level of risk where you live, take it very seriously.

Model guidance is also improving dramatically. Perhaps this forecast is a little too good to be true, but that a 23-hour forecast from a convection-allowing model could place a supercell within 3 mi of where one actually occurred is incredible.

This is the storm that was forecast so well that I could've camped on Lake Thunderbird to wait for the tornado. If only I could depend on that more than once in a blue moon.

|

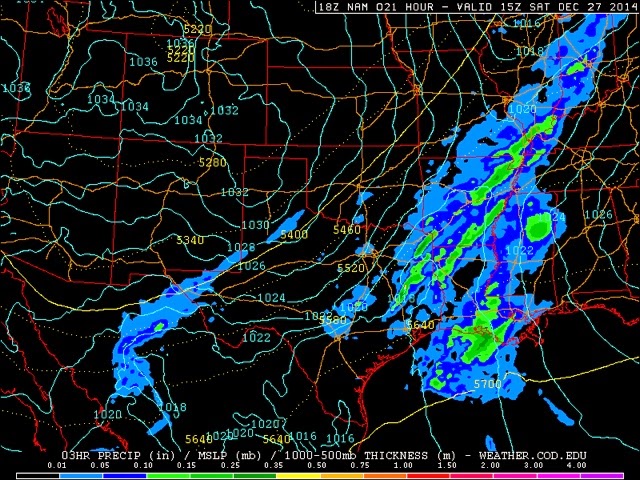

More typically convection allowing models yield usable forecasts with several hours to half a day lead time. Ensemble model systems can be helpful in determining the mode of severe weather. |

That kind of guidance helps the SPC decide not just whether to issue a tornado or a severe thunderstorm watch, but also what level of confidence to attach to each type. I put this slide in to show that not all tornado watches are equal. The SPC web page posts a lot of information about the likelihood of weak and strong tornadoes with each watch, and those probabilities were maxed out on the El Reno tornado day.

The NWS and the DOT worked together to make sure anyone passing under this sign, and others like it, knew of the impending severe weather. This message wouldn't be here without the collaborative forecasting between the SPC and the local forecast offices, along with the major NWS stakeholders. Note that the sign focuses on the expected time of highest threat.

The tweet has the same expected time of danger as the highway sign. This kind of messaging consistency is highly desirable.

The NWS provided advice when watches were issued.

Note that this advice strongly encouraged people to travel, with a destination in mind, well before the warnings came out.

And when the warnings went out, the NWS provided this advice. Nothing in the advice at this late hour discusses driving somewhere.

What was the reaction on the El Reno day? Not what was desired.

Thousands of people took flight. This slide lists multiple reasons behind this mass, short-fused migration. Note that the TV broadcasts were not solely at fault. Also to blame were the heightened anxiety from the Moore tornado and an OKC culture corrupted by years of fear that residents would not survive in their homes without going underground.

The panic was strong enough to overwhelm many drivers' sense of responsible driving, leading to this self-organized contraflow. However, 'organized' may not be the best word to use when the underlying force is panic.

Almost forgotten by the public was the flash flooding, as numerous storms trained over the metro area behind the lead storm that produced the El Reno tornado. The flooding potential was not a surprise, however. Numerical model guidance and forecasters highlighted the potential for flood-producing rains, and a flash flood watch was issued. In Oklahoma culture, the tornado threat provokes images of fear, leaving the flash flood threat in the dust of the collective consciousness.

Significant casualties occurred as people fled the tornado threat into flood zones. Some people lost their lives when they hid in a drainage tunnel.

What was the problem? Inconsistent messaging among members of the integrated warning team. This problem keeps being cited in various reports, such as the NIST report on the Joplin tornado and the NWS service assessment of the 2011 tornadoes. If consistent messaging should be expected anywhere, it should be in the Oklahoma City area.

The mass evacuation on May 31 was not solely the result of the media's messaging. The stage was already set by the sense of dread so dramatically acquired after the May 20 tornado hit Moore. NWS service assessment findings indicated that many of those who evacuated felt compelled to leave, yet later regretted that decision. Will that regret result in more appropriate reactions to tornado warnings in the future? Perhaps, or perhaps not.

This is a complicated graphic, but it's very revealing. Someone's risk is a function of his/her vulnerability and the intensity of the event that threatens him/her. Consider that someone at a campsite or in a small boat is much more vulnerable than someone in an office building or on a large ship. Someone in a large building need not worry much about a severe thunderstorm warning, but someone in a tent should understandably be concerned. People need to understand how to manage their own risk. Neither the NWS nor anyone else can do it for them, because people's circumstances vary so much. Instead, warnings are issued with a single threshold that cuts across a wide spectrum of vulnerability. Forecasters do the best they can, but a single threshold can't account for everyone's vulnerability. Technology can help, though. Perhaps smartphone tools will come about to help interpret someone's risk for them; as I understand it, some are already in development.

A big part of risk management is deciding what needs to be done with a given amount of lead time, one of the major determinants of vulnerability. When residents had plenty of time, a larger proportion of them decided to flee. Others decided they had time to gather important belongings and get more information.

Fleeing by vehicle during a warning may seem like good risk management, but I doubt many people adjusted their sense of how much time they had when everyone else wanted to flee too. I'm confident that panic set in for many drivers once they felt they were taking too long relative to the lead time they thought they had. And they would have been right had the storm produced another large tornado in the metro.

Had the parent storm of the El Reno tornado put down another such tornado, multiply this picture by hundreds, and you have a plausible scenario of poor risk management on a massive scale. This is an example of extremely high vulnerability.

Postings like these at OU could help steer people's risk management in the right direction. Perhaps more people would stay at home. Most would survive, as past experience with violent tornadoes striking urban areas shows: only 0.6% of those in homes struck by violent tornadic winds didn't survive. But a higher percentage of people would be injured, thanks to the quality of our housing, and nobody tracks the consequences of those injuries.

Our collectively poor housing quality is a source of high vulnerability: maybe not as high as being in a vehicle, but high nonetheless.

There are exceptions, such as new neighborhoods along the Gulf or East Coasts built to modern hurricane codes. These houses near Lady Lake, FL performed so well that it was tough to rate the tornado. With typical construction, you would not see houses with only shingle loss alongside cars that had been blown around. These houses are tough.

Shelters have certainly proven themselves time and time again in OK amid the onslaught of violent tornadoes in the past few years. More and more shelters are being installed in central OK, and they have a long track record of saving lives. I've heard that over 200,000 of them have been installed in OK over the past few years. This is the ultimate 'lower your vulnerability' act.

To help people manage their own risk, we need to give them better guidance. Thus our warning system needs to change. Recall today's single-threshold, lead-time paradigm in the NWS. This will change.

Part of that change will come from better input that helps NWS forecasters make better decisions.

That's what the Hazardous Weather Testbed is all about.

The HWT allows forecasters to try out new technologies, give feedback, and improve the input.

Phased array radar is one of those new technologies that could revolutionize forecaster input.

Super-rapidly updating model guidance is another game-changing input paradigm. But if forecasters want new model guidance every several minutes as input into short-term warning updates, the technology will not be ready for some years.

But such wonderful new technological input may seem wasted when the forecaster's warning output follows a 40-year-old model. We can do better.

Maybe we already are. On the El Reno day in 2013, the NWS Norman (OUN) office was putting out information by the minute, much more frequently than the 40-year-old warning model calls for. This output went out via multiple channels on each day of severe weather.

In the future, warnings may move with the storms, so that no one place gets shortchanged on lead time by discrete, static polygon issuance.

Then there's FACETS, a project at NSSL to take the output to the next step, where gridded probabilistic guidance for each hazard can help inform people as they manage their risk. It will take a lot of work to determine what form this output will take. But whatever form it takes, institutions responsible for moving large numbers of people (e.g., large outdoor venues and hospitals) could certainly use this guidance to begin preparations well before any legacy warning is issued.

Still, we come back to the quality of our housing. Risk management would be a lot easier if our houses were built well enough to provide a decent level of protection. It's not just about health; we also have to consider mitigating the lost productivity that comes with lost property.

It's major events like the 2013 Moore tornado that give us learning moments about how to build better.

We learn by doing surveys. Aerial and even satellite imagery help define areas of interest that we want to investigate further. Imagery from the ASTER instrument showed dramatically reduced vegetation health (healthy is red) along the tornado track. It even showed a kink in the damage path next to I-35 in Moore that was worth investigating further.

Aerial and ground surveys help us assess the damage intensity and its spatial extent.

Radar data also assisted our surveys, initially by helping to narrow down the damage swath. Data from the PX1000 radar, run by the Advanced Radar Research Center, helped spot the small loop the tornado made near the Moore Medical Center.

Several teams rated 4,000 damage indicators. This segment was near Plaza Towers Elementary School, which was destroyed by the tornado and where 7 students were lost. The imagery is from Google Crisis Response.

We find housing flaws that should not have happened. All homes in the neighborhood built around 2000 had their foundations swept completely clean, while the houses in the neighborhood east of the school withstood the tornado long enough that most walls were down but debris remained. How could an entire neighborhood built in this day and age perform worse than an older neighborhood? Home buyers need to become better customers and demand better from home builders. But it shouldn't stop there. Towns need to adopt better building codes, not after a disaster, but before it happens!

School buildings performed poorly in the tornado. We know why, and what should be done to mitigate this kind of performance. It was only through good risk management that the teachers managed to save as many kids as they did. Schools need only build proper reinforced CMU walls, with proper connections to the roof and foundation, to save the rest.

Many houses have no continuous load path. This picture is a screaming example of what the lack of one can do. Well, I guess someone sleeping in an upstairs bedroom would be OK in this picture, but what a ride it would've been.

One could build a steel-reinforced concrete dome house for the ultimate in tornado resistance. But how many home buyers want to live in a home that looks like a spaceship?

Yet the couple who were in the house at the time of the tornado were injured, simply because they watched it approach from the kitchen bar instead of taking further shelter in the bathroom. All interior rooms were completely intact, with no sign that debris or even dust had penetrated, and without the need for a storm shelter.

Well, we don't have to live in a spaceship for added protection. It's simple to create a continuous load path in a house under construction. About $1,000 worth of clips, J-bolts, and metal straps, anchoring the roof all the way down to the foundation, covers the lion's share of what's needed. The rest comes from fortifying garage doors and windows and adding cross-bracing through proper sheathing. None of this is rocket science, and all of it is worth the price, especially considering the cost of retrofitting a house after it's built.

The labor might cost a bit more, but what home buyer would turn this down if they knew how easy it is to do? This could prevent a lot of damage.

Look what strapping did for an apartment complex in Joplin.

This apartment complex was hit by EF2 winds, yet it did not suffer the level of damage expected in such winds for apartments of standard construction.

These houses also had a continuous load path, with steel-reinforced CMU walls and proper roof attachments. The windows are still the only Achilles' heel for any of these buildings.

This office building in Woodward, OK would've done okay if broken windows hadn't let the wind inflate the building and blow off the roof. Can more be done with windows?

This hospital had laminated glass on one floor, and none of it broke in the Joplin tornado. Laminated glass is more expensive, just like a storm shelter. But who in Moore would complain?

Part of building better is understanding how buildings perform in real tornadic winds. Guess what: tornadic winds don't follow straight lines.

We make a lot of assumptions about how buildings perform. Do they all really respond to a 3-second wind gust measured at 10 m? Is that really the wind in a tornado? Do winds in a tornado environment always decrease in strength toward ground level, as so many 'experts' assume?

No! And in-situ measurements are showing it. This profile was taken in the Goshen County, WY tornado during VORTEX2. The Tornado Intercept Vehicle measured wind speeds at 3.5 m AGL (3-second gusts in red) considerably higher than those sampled by the DOW radar beam at 30 m AGL (yellow).

A lot of different assumptions have to be made when the peak horizontal wind is at 3 m above ground versus 30 m or even 300 m. It's time to throw out the old thinking.

The forces on buildings can be much stronger under a vertical profile of constant horizontal wind in a tornado environment than conventional wisdom implied with a typical profile, in which wind increases with height from 3 m to 10 m and above. For winds that increase in strength toward 3 m, the forces would be stronger still than this graph shows. It also means that winds sampled by mobile radar somewhere above 10 m can greatly underestimate the winds near the ground. And we have not even discussed strong vertical wind components near the ground.

Videos such as this one in Leighton, AL provide compelling evidence that intense vertical winds begin below car-top level. Wind engineers now realize this is true: horizontal winds alone don't lift cars without first sliding or rolling them.

And they are doing something about it. This simulator at Iowa State is already providing wind engineers with data on how much stronger tornado wind stresses are than those from other storms.

Mobile radar is also providing some compelling evidence that tornadoes are stronger than the damage-based climatology suggests in areas where there's not much to damage.

Winds do not always increase from 10 m down to 3 m. We still need a lot more data to determine which tornado behaviors are associated with a reverse wind profile, and with the strong vertical flow near ground level observed when tornadoes exhibit strong corner flow. This information is critical. Assets like mobile radar and in-situ probes, whether carried by the TIV or deployed as smaller probes, will need to sample tornado environments many times to acquire data from a variety of tornado behaviors over a variety of terrain. I even encourage those chasers willing to put armored vehicles in harm's way to go the extra inch and outfit their vehicles with research-quality, well-sited anemometers. That may sound controversial, but if they're bent on doing this, they could at least help provide the data to answer these questions. After the money spent on armoring vehicles, adding a research-quality anemometer should be pretty trivial. Then we can produce more accurate models of building performance in tornado environments.*

To recap, I put this talk out there to highlight some of the learning moments that struck a chord with me. I believe the quality of severe weather forecasts has improved to the point where societal and communication issues dominate in any disaster. Getting people to react properly by learning good risk management is key. We've only scratched the surface in this respect, and we can do so much more. I believe every K-12 school curriculum should include a required course on risk management, whether it's managing funds or managing safety. That's not to say that forecast improvements won't help. They will, as long as the methods of communication keep up with forecast capabilities so that forecasts continue to be useful.

But to help the public and officials manage their risk, the research community needs to continue helping the NWS change its warning paradigm. No longer should the NWS provide only warnings; it should also provide warning guidance so that all users can create their own warnings based on their own vulnerability. Fortunately, the momentum behind the FACETS program holds promise because it has grabbed the attention of NWS and NOAA management. Now it must grab the attention of other sectors, especially the private sector, which can provide a broad variety of tools to help users manage their risk.

Finally, let's do something about the built environment. I'm getting tired of seeing one FEMA/NIST/NSF report after another talking about the inferior quality of our housing construction. Fortunately, after three violent tornadoes have struck the city of Moore, I hear that housing codes may be upgraded. The same has happened in Joplin, MO, after its tornado. I only hope that one day a disaster won't be required for codes to be upgraded. Can we actually be proactive? Well, I'm not holding my breath. Sometimes, indeed most of the time, it takes consumers who want better-built houses and demand better from their home builders. I don't know the answer to this except more vigorous education. Grab them when they're young, before they fall into the trap of valuing frivolous furnishings like granite countertops above the things that could save their lives (e.g., shelters, sprinklers, continuous load paths). Yes, that's another call for education in grades K-12.

\* I do not assume any responsibility for the risk chasers put upon themselves should they decide to transect tornadoes with their armored, or otherwise, vehicles.